RIKI’s Peer Review Framework

Why Traditional Peer Review Is No Longer Enough

For decades, peer review has served as the gatekeeper of academic quality. While its importance remains undisputed, the traditional process is increasingly being challenged by the demands of a rapidly evolving research landscape. Long delays, opaque evaluation criteria, reviewer bias, and inconsistent feedback often undermine the reliability and efficiency of the review process.

In today’s fast-paced, interdisciplinary, and AI-enhanced academic world, scholars require a peer review model that is faster, more transparent, and better suited for the complexity of modern research outputs. Rigid legacy systems no longer meet the expectations of a new generation of researchers who value speed, clarity, and fairness without compromising academic rigor.

RIKI’s Vision for Peer Review

At RIKI Journal, we are reimagining peer review from the ground up. Our goal is to build a system that retains the core strengths of scholarly critique while enhancing it with the power of artificial intelligence and a fresh commitment to transparency. We envision a model where:

- AI acts as an assistant, offering detailed structural, linguistic, and citation-based analysis of manuscripts.

- Human reviewers focus on the essence of academic contribution: originality, methodology, relevance, and impact.

- Authors receive clear, data-backed feedback with actionable steps for improvement.

- Decisions are made faster, but grounded in a combination of expert judgment and algorithmic insight.

Our mission is simple yet transformative: to bring trust, speed, and clarity back to the peer review process—without losing its soul.

The Philosophy Behind Our Approach

At RIKI Journal, we do not see peer review merely as a technical checkpoint—it is a philosophical commitment to the integrity of knowledge. The traditional peer review process was built on trust, scholarly dialogue, and the communal effort to uphold academic standards. Yet, in an age where information moves faster than ever and interdisciplinary boundaries are blurred, this process demands reexamination.

Our philosophy centers on optimizing—not replacing—the human elements of peer review. We believe reviewers should spend less time on mechanical tasks (such as identifying citation formatting errors or linguistic inconsistencies) and more time engaging deeply with the intellectual merit of a submission. AI enables this shift by offloading repetitive and error-prone tasks, allowing human expertise to flourish where it matters most: judgment, insight, and interpretation.

Peer review, in our model, becomes not only a gatekeeping function but a developmental one—a process that improves research, guides scholars, and contributes meaningfully to academic discourse. It is a partnership between human and machine, built on respect for both precision and perspective.

Our Core Principles: Transparency, Quality, Speed, and Fairness

The foundation of RIKI’s peer review model rests on four non-negotiable principles:

Transparency

Authors deserve to understand how their work is evaluated. Our process includes clear scoring rubrics, AI-generated diagnostic reports, and reviewer feedback that is structured, justified, and traceable. There are no black boxes—only open dialogue.

Quality

Excellence in research is multifaceted. We assess manuscripts not only for logical soundness and originality but also for structural clarity, depth of literature engagement, and relevance to current academic conversations. Both AI and human reviewers are trained to uphold these high standards.

Speed

Quality should never come at the cost of unnecessary delay. By combining machine efficiency with expert oversight, we cut review times dramatically, often from months to days, without compromising rigor.

Fairness

Bias—conscious or unconscious—has long plagued traditional peer review. Our system mitigates this through anonymization, standardized metrics, and AI-supported checks that ensure consistency across submissions, reviewers, and disciplines. Every paper gets a fair chance based on its merit.

These principles inform every step of our workflow, from initial screening to final editorial decisions. They are not aspirational—they are operational.

How AI Enhances the Process—Without Replacing People

The use of AI in peer review often raises a valid concern: are we automating away human judgment? At RIKI, the answer is emphatically no. Our goal is augmentation, not automation.

AI plays a pivotal role in preprocessing manuscripts—evaluating aspects like structure, grammar, reference accuracy, redundancy, and potential plagiarism. It provides reviewers with a comprehensive diagnostic overview, highlighting areas that may require closer attention. This pre-analysis ensures that reviewers spend their time where their expertise matters most: assessing originality, methodology, theoretical grounding, and broader academic value.

Additionally, AI helps surface patterns that human reviewers may overlook: citation gaps, hidden assumptions, or semantic inconsistencies across sections. However, it is always the human reviewers who interpret the AI feedback, validate its insights, and ultimately make decisions.

Our hybrid model preserves the irreplaceable nuances of human evaluation while harnessing the scalability, objectivity, and speed of machine learning. It’s not about taking people out of the process—it’s about giving them better tools.

The RIKI Peer Review Workflow: A Four-Stage Hybrid Model

At RIKI Journal, we believe that academic peer review must be both rigorous and responsive to the evolving needs of contemporary research. Our workflow is not simply a rebranding of traditional systems—it’s a re-engineered process designed to combine the best of human expertise with the analytical precision of artificial intelligence. Below is a breakdown of our four-stage peer review model and the rationale behind each step.

1. Initial Automated Screening (AI-Powered Pre-Review)

The first stage involves a comprehensive AI-driven diagnostic analysis. Rather than assigning a manuscript directly to human reviewers—who often spend a significant portion of their time identifying basic errors—we begin with a structural, linguistic, and citation-based evaluation.

Our proprietary AI system performs tasks such as:

- Detecting grammatical inconsistencies and logical redundancies.

- Assessing the completeness and relevance of citations.

- Identifying potential plagiarism or content duplication.

- Evaluating abstract structure, formatting coherence, and section balance.

Rationale: By automating low-level checks, we free human reviewers to concentrate on deeper intellectual assessments. Moreover, this screening ensures a uniform baseline of quality across all submissions, reducing noise in the peer review process and decreasing reviewer fatigue.

2. Human Reviewer Selection and Matching

Following the AI screening, each manuscript is routed to two to three human reviewers from our diverse global panel. Reviewer selection is based on:

- Subject-area expertise.

- Prior reviewing history.

- Geographic and institutional diversity (to minimize unconscious bias).

Reviewers are granted access to both the manuscript and the AI-generated report, which acts as a decision-support tool rather than a verdict.

Rationale: One of the persistent problems in peer review is subjectivity—different reviewers often focus on different aspects or apply inconsistent standards. By providing a standardized AI diagnostic as a foundation, we enhance the consistency and objectivity of the human feedback process without replacing it.

3. Synthesis of Human and Algorithmic Insights

Reviewers provide structured commentary on key aspects such as:

- Originality and significance of the research question.

- Soundness of methodology and data interpretation.

- Theoretical grounding and relevance to the field.

- Ethical considerations and transparency of reporting.

Once the reviews are in, the editorial team synthesizes them with the AI diagnostics to create a composite score and an integrated assessment. This synthesis allows for:

- Resolution of reviewer discrepancies using data-driven cues.

- Highlighting patterns that may not be evident from individual reviews.

- Transparent, traceable justification for each editorial decision.

Rationale: Rather than treating AI and human reviewers as separate or conflicting sources, we treat them as collaborative contributors. This synergy enhances the accuracy, fairness, and depth of our assessments.
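As a rough illustration of what a data-driven cue for reviewer discrepancies could look like, the sketch below flags any criterion on which reviewers' scores differ by more than a set margin. It assumes reviewers give a numeric 0 to 100 score per criterion. The function name `flag_discrepancies`, the reviewer labels, and the 20-point margin are hypothetical and are not taken from RIKI's actual system.

```python
def flag_discrepancies(reviews, max_spread=20):
    """Return {criterion: spread} for criteria where reviewers disagree strongly.

    `reviews` maps a reviewer label to that reviewer's per-criterion scores.
    ASSUMPTION: numeric per-criterion scores and the 20-point margin are
    illustrative only; the source does not specify either.
    """
    flagged = {}
    # Collect every criterion that at least one reviewer scored.
    criteria = sorted({c for scores in reviews.values() for c in scores})
    for criterion in criteria:
        values = [s[criterion] for s in reviews.values() if criterion in s]
        spread = max(values) - min(values)
        if spread > max_spread:
            flagged[criterion] = spread
    return flagged


# Hypothetical reviews from two reviewers.
example_reviews = {
    "reviewer_a": {"originality": 85, "methodology": 70},
    "reviewer_b": {"originality": 55, "methodology": 65},
}
print(flag_discrepancies(example_reviews))
```

In this example only originality would be flagged, which could prompt the editorial team to look more closely at that criterion before settling on an integrated assessment.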

4. Final Scoring and Editorial Decision

The final decision is made by the editorial board, drawing upon:

- Human reviews (including reviewer confidence levels).

- AI-diagnostic summaries.

- The composite score (ranging from 0 to 100).

- The alignment of the manuscript with RIKI’s editorial priorities.

Each manuscript is assigned a numerical score that reflects both technical quality and scholarly contribution. Together with the inputs above, this score informs whether a paper is:

- Accepted without revision.

- Accepted with minor or major revisions.

- Rejected with constructive feedback.

Authors receive a detailed feedback packet including:

- Reviewer comments.

- Annotated AI report.

- Editorial rationale and final score.

Rationale: Our scoring system does more than determine publication—it provides authors with a transparent, actionable roadmap for improvement, whether they publish with us or elsewhere.

A New Standard for Academic Integrity

By integrating structured automation, curated human expertise, and editorial oversight, RIKI’s peer review process establishes a new standard of accountability and efficiency in scholarly publishing. Our model respects the complexity of academic work while acknowledging the need for speed, consistency, and fairness in evaluating it.

This is not automation for its own sake—it is a strategic rebalancing of labor, attention, and judgment in service of academic progress.

The RIKI Quantitative Review Model: 0–100 Scoring System

At RIKI Journal, we believe that every academic manuscript deserves not only a decision but also a clear explanation. Our quantitative peer review model assigns a final score between 0 and 100 based on a weighted assessment of originality, methodology, writing quality, theoretical grounding, ethical standards, and relevance to the field.

This score is not a black box: it is backed by a detailed review framework that ensures transparency, fairness, and consistency across disciplines.
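To make the idea of a weighted assessment concrete, here is a minimal sketch of how six sub-scores might combine into a single 0 to 100 score. The six criteria come from the description above. The weights and the function name `composite_score` are assumptions chosen for illustration, since the actual weighting is not published here.

```python
# ASSUMPTION: these percentage weights are hypothetical. The framework names
# the six criteria but does not state how they are weighted.
WEIGHTS = {
    "originality": 25,
    "methodology": 25,
    "writing_quality": 10,
    "theoretical_grounding": 15,
    "ethical_standards": 10,
    "relevance": 15,
}
assert sum(WEIGHTS.values()) == 100


def composite_score(sub_scores):
    """Weighted mean of per-criterion sub-scores, each on a 0-100 scale."""
    missing = WEIGHTS.keys() - sub_scores.keys()
    if missing:
        raise ValueError(f"missing sub-scores: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0 <= sub_scores[name] <= 100:
            raise ValueError(f"{name} must be between 0 and 100")
    # Weights are percentages, so divide by 100 to stay on the 0-100 scale.
    return sum(WEIGHTS[name] * sub_scores[name] for name in WEIGHTS) / 100


# Hypothetical sub-scores for a single manuscript.
example = {
    "originality": 80,
    "methodology": 70,
    "writing_quality": 90,
    "theoretical_grounding": 60,
    "ethical_standards": 100,
    "relevance": 75,
}
print(composite_score(example))  # 76.75
```

Keeping the weights in one visible table is one way to support the transparency goal: an author can see exactly how much each criterion contributed to the final number.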

Why We Use a Numerical Score

- Clarity for Authors: Authors receive a clear understanding of where their manuscript stands and how to improve it.

- Transparency for the Community: Our scoring helps build trust in the decision-making process.

- Comparability Across Submissions: Scoring enables consistent standards across disciplines.

- Actionable Feedback: Each score comes with a rationale and pathway forward.

RIKI Peer Review Scoring Table

| Score | Rating Title | Evaluation Summary |
|---|---|---|
| 21 | Fundamentally Flawed | The manuscript demonstrates major issues in methodology, logic, and/or ethical standards. It lacks theoretical grounding and fails to meet basic academic expectations. Not suitable for revision without full reworking. |
| 43 | Severely Limited | The study shows some intent and effort but lacks rigor. Methodological flaws, unclear writing, or weak argumentation prevent meaningful contribution. Suggest rethinking research design before resubmission. |
| 49 | Incomplete or Underdeveloped | Key components are present, but the work is immature. Citations may be outdated, and the framing is superficial. Could be improved with substantial rewriting and theoretical deepening. |
| 57 | Promising but Problematic | The manuscript poses an interesting question, but significant gaps remain in data interpretation, literature engagement, or structural coherence. Borderline for revision. |
| 60 | Meets Minimum Academic Standards | A publishable idea exists, but the execution is uneven. Acceptable structure, but lacks depth, originality, or clarity. Could move forward with major revisions. |
| 72 | Solid and Competent | A well-organized manuscript with clear methodology and reasonable contribution. Minor improvements to framing, discussion, or engagement with recent literature are recommended. |
| 83 | Strong Contribution | The paper demonstrates originality, rigor, and coherence. Some stylistic polishing or enhanced critical discussion would elevate it further. A few citations may need updating. |
| 85 | Excellent Academic Work | An impressive manuscript that blends clear structure with sound argumentation, relevant literature, and strong methodology. Readable, insightful, and nearly ready for publication. |
| 90 | Outstanding, Minor Tweaks Only | High-quality work that aligns perfectly with RIKI’s editorial standards. Includes original insight, polished writing, and theoretical robustness. Needs only small clarifications or formatting adjustments. |
| 96 | Top-Tier Submission | A publication-ready manuscript that showcases leadership in its field. Methodology, framing, and impact are exceptional. Rarely requires edits. |
| 100 | Model Academic Excellence | Exemplifies the highest standards of academic scholarship. Could serve as a benchmark for others. This level is seldom reached and reserved for truly exceptional work. |
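The table lists single scores rather than ranges, so it does not say how a score that falls between two rows maps to a rating title. The sketch below shows one possible reading, assuming purely for illustration that each listed score marks the top of its band. The function name `rating_title` and that band interpretation are hypothetical. Only the score and title pairs are taken from the table.

```python
from bisect import bisect_left

# (score, rating title) pairs copied from the scoring table.
RATING_ANCHORS = [
    (21, "Fundamentally Flawed"),
    (43, "Severely Limited"),
    (49, "Incomplete or Underdeveloped"),
    (57, "Promising but Problematic"),
    (60, "Meets Minimum Academic Standards"),
    (72, "Solid and Competent"),
    (83, "Strong Contribution"),
    (85, "Excellent Academic Work"),
    (90, "Outstanding, Minor Tweaks Only"),
    (96, "Top-Tier Submission"),
    (100, "Model Academic Excellence"),
]
_UPPER_BOUNDS = [score for score, _ in RATING_ANCHORS]


def rating_title(score):
    """Map a 0-100 score to a rating title.

    ASSUMPTION: each listed score is treated as the upper bound of its band
    (for example, 22 through 43 maps to "Severely Limited"). The source table
    does not define the bands, so this is only one possible interpretation.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    # bisect_left finds the first anchor that is >= score.
    return RATING_ANCHORS[bisect_left(_UPPER_BOUNDS, score)][1]


print(rating_title(72))  # Solid and Competent
print(rating_title(78))  # Strong Contribution
```

Under a different reading, such as treating each listed score as the bottom of its band, the same scores would map to different titles, so the band boundaries would need to be confirmed before relying on a lookup like this.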

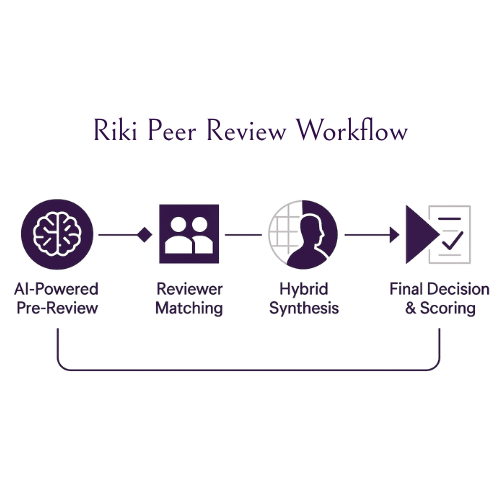

Infographic Concept: RIKI Peer Review Workflow

This infographic will be laid out as a clean, left-to-right timeline that guides the reader through each of the four core stages of the RIKI peer review process. At the top, a bold title bar—framed by a thin dark-purple line—will read “RIKI Peer Review Workflow” in Bellefair, immediately establishing the academic tone. Beneath this, four evenly spaced “milestone” nodes will punctuate the horizontal axis, each accompanied by a short label and icon:

AI-Powered Pre-Review

A simple circle icon containing an abstract AI-brain graphic will sit above the first node. A brief caption beneath will explain that manuscripts undergo structural, linguistic, and citation diagnostics before any human involvement.

Reviewer Matching

The second node will feature a stylized “people” icon inside a small square motif (echoing RIKI’s signature square), signifying the selection of expert reviewers. The caption will note the use of expertise, diversity, and AI reports to ensure fairness.

Hybrid Synthesis

Above the third node, a split-circle icon—half algorithmic grid, half human silhouette—will illustrate the fusion of machine insights and human commentary. A concise legend will describe how AI diagnostics and reviewer feedback merge into a composite assessment.

Final Decision & Scoring

The final node will show a triangular arrow icon (the RIKI triangle), pointing to a stylized “scorecard.” The caption will explain that the editorial board issues a 0–100 score, complete with detailed feedback packets.

A fine, continuous line will connect these four nodes, with subtle arrowheads indicating forward momentum. At each junction, a small RIKI-purple triangle or square will appear as a decorative accent—triangle shapes on upward-pointing arrows, square backgrounds behind node icons—reinforcing brand identity without overwhelming the layout.

Advantages of Our Model Over Traditional Peer Review

1. Rapid Turnaround Times

Traditional peer review can stretch over several months, delaying critical feedback and publication. At RIKI, our hybrid AI–human workflow streamlines initial checks and automates routine tasks, cutting average response times to days rather than months. Faster decisions mean authors can iterate more quickly, accelerating the pace of research and discovery.

2. Consistency and Standardization

Human reviewers bring expertise—but they also introduce variability. Our quantitative scoring rubric, combined with AI diagnostics, ensures that every manuscript is evaluated against the same clear criteria. This standardized framework minimizes subjective discrepancies, so authors know their work is judged fairly and uniformly across submissions and disciplines.

3. Enhanced Research Experience

RIKI’s model transforms peer review from a gatekeeping hurdle into a developmental partnership. By offloading clerical checks to AI, reviewers dedicate their time to in-depth critique of originality, methodology, and impact. Authors receive comprehensive, constructive feedback that not only informs editorial decisions but also guides meaningful revisions.

4. Transparency for Authors

Opaque “black-box” reviews leave authors guessing at the rationale behind decisions. RIKI provides full visibility into every step: from the AI-driven pre-screen to individual reviewer comments and composite scores. Clear explanations of each score and criterion empower authors to understand strengths and weaknesses at a glance.

5. Full Access to the Review Report

Authors gain unrestricted access to the entire review dossier—AI summaries, annotated manuscripts, reviewer comments, and editorial rationale. This holistic view helps researchers trace the evolution of their work, identify areas for improvement, and learn best practices directly from the feedback itself.

6. Detailed Scoring by Criterion

Instead of a single “approve” or “reject” verdict, RIKI assigns a 0–100 score with sub-scores for originality, methodology, writing quality, theoretical grounding, ethics, and relevance. This granularity offers precise insight into which aspects of the manuscript excel and which require attention, enabling targeted revisions rather than broad, unfocused rewrites.

7. Opportunity for Response or Appeal

We recognize that peer review is a dialogue, not a monologue. After receiving their report and scores, authors may submit formal responses or appeal specific points. This built-in mechanism fosters constructive exchange, helps resolve misunderstandings, and upholds the integrity of the review process by giving authors a voice in shaping the final decision.

Participation as a Reviewer

Join our global panel of peer reviewers and contribute directly to shaping the future of scholarly publishing. As a RIKI reviewer, you’ll gain early access to cutting-edge research, collaborate with leading academics, and help authors refine their work through your expert insights.

Invitation to Researchers: Become a Reviewer

We invite distinguished researchers and subject-matter experts to join the RIKI reviewer community. Share your expertise, expand your professional network, and play a pivotal role in advancing academic quality. Your contributions will be recognized in our reviewer acknowledgments and on our platform.